With the Oprah thing and the rather bizarre Maureen Dowd interview (ripe for parody), I thought I might as well throw my two cents in.

Actually, this article was the one that convinced me to write something, specifically this quote:

I used to think Twitter would never catch on in the mainstream because it’s somewhat stupid. Now I realize I was exactly wrong. Twitter will catch on in the mainstream because it’s somewhat stupid. It’s blogging dumbed down for the masses, and if there’s one surefire way to build something popular, it’s to take something else that is already popular and simplify.

To clarify, I think this is fundamentally wrong and completely misses the point. (As an aside, similar things were said about blogging when it started taking off. These comments were also fundamentally wrong in the same way.)

Now, for some context: I was a relatively early adopter (my first tweet came when, I believe, it was still called twttr, with a snot-themed logo and a focus on SMS), but that’s not to say my own understanding and thinking haven’t evolved along with the service (and its audience)…

The first time Twitter really showed up on my radar was while I was in London, where it had gotten a fair amount of traction as a cheaper way to text. Along those lines, it took off the next year at SXSW, again as a “group chat” style tool: a way for friends to coordinate in a lighter-weight and less annoying way than Dodgeball. At this time, it was still focused around SMS delivery, although there were some interesting clients starting to pop up. It was also around this time (post-SXSW) that my (and others’) focus turned to looking at Twitter through the lens of ambient awareness (Clive Thompson did a great writeup last year) and what we began to refer to in conversation as “statuscasting” (a term which I might have made up, but which I assume must have been on the tip of everyone’s tongue). Then there was a big explosion in clients, mashups, and the use of Twitter as a “command line” interface. And, of course, through all of this, Twitter continued to build up steam in the way that social tools do, as waves of adopters and their networks jumped on board. While there has always been a dialectic between semi-private conversation and broadcast/publishing that continues to make Twitter really interesting, the trend arguably has been toward the latter (especially with the “collection” of followers).

Now, with some context that hopefully hints at some level of complexity to the Twitter phenomenon, here’s where I return to directly smacking down that original quote and offering an alternative interpretation…

Twitter isn’t “dumbed-down blogging” any more than blogging was “dumbed-down long-form writing.” What blogging uncovered was a “web-native” sort of communication – one focused on links (both hyperlinks and permalinks), temporality (dated posts, reverse chronological order), and decentralized conversation – at first manual, since people simply read each other’s blogs (when I started, it all fit on a single list), then later with comments, and formalized through trackbacks, pingbacks, and dedicated aggregation tools. It took a while, but I believe that Twitter has revealed a communication style that is native to the “web” of today. What is this web? It’s one filled with activity streams – the “social web” and the “continuous partial web” – and one that exists beyond the browser and beyond the desktop – the mobile web and the “widget web.” The ingestion characteristics of these media are focused around intermittent (but constant) bursts of attention and the ability both to scan the gestalt and to track details, and the output is about the “in-between times” of other activities. You don’t sit around for an hour writing a tweet. In fact, most people start with time that otherwise would have been spent idling (hence the large proportion of airport complaint messages).

That, I suppose, is one aspect of the quote that is right – Twitter does have more mass appeal because it can take root by filling a vacuum rather than being an activity that requires active displacement (at least to begin with!). The point is that it’s high immediate reward with low incremental commitment. And of course, the innocuousness of that small text box is part of Twitter’s genius…

Now, if there is a better (or different) model, my suspicion is that it’s in finer scoping. Sure, geeks like to talk about interop and decentralization (and while that may come, as it did for email, it also may not, as with IM), but I think it’s ultimately less interesting than figuring out how Twitter (or a similar type of service/activity) ends up bifurcating or integrating the aforementioned pull between public and private (groups? targeted/typed messages?).

I think that’s where we already see some interesting things, like how location services have splintered off, and I think that’s what Facebook is attacking – in the same way that it created a semi-private place for photo and online-discussion activity, it’s trying to do so for tweets as well.

For those who recall, this harks back to discussions on semipermeability (ironically semipermanent; here’s the archive of Joyce’s paper on that), which never really took off (again, a niche that Facebook expanded into, I think).

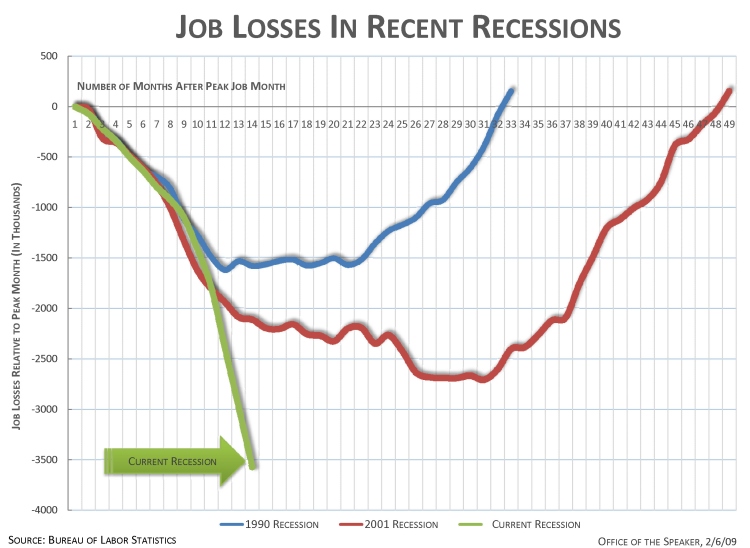

Well, there’s not much of a conclusion here. This is entitled random thoughts after all. Maybe two last things while I’m here for those who remember the milieu and impetus of blogging… Firstly, my friendfeed, which is currently aggregating my activity streams across over a dozen services, and second, a graph of my blog output over the past few years:

See also:

- Today, Jason Kottke wrote a great piece entitled In Defense of Twitter

- As a counterpoint, back in 2007, Kathy Sierra asked Is Twitter Too Good?